Big O Notations

the way I understand algorithm complexity

10 Dec 2016

View Comments

#computer

#complexity

#big

#algorithm

Classify algorithms by the input size (n)

Big O notation is probably the most widely used method to describe the code complexity. When I say complexity, I mean the time and (memory/disk) space required to execute certain algorithm assuming that you are given with a very large set (n). I guess you can refer to complexity as a performance of the code. There are different notations to describe the complexity of the algorithm. Let me walk you through from fastest to slowest notations one by one. Hopefully, you could understand better this way. Below Big O notations are ordered from fastest to slowest complexity.

O(1)

Big O(1) means the time to execute such algorithm is basically instantaneous. The time it took to execute the algorithm with 1 input vs 1 million input is the pretty much the same for time complexity. A good example of Big O(1) would be a look up in a HashSet. Say you have 1 item in a set called a=>1, a being the key. Now, we can look up the value 1, via asking the set for a key which is instantaneous. Same is true even if you had a million rows. Now you have a million rows of a=>1, b=>2, c=>3 … million. Then I want to know the value that belongs to a key. The time that takes to fetch a from 1 item vs million items is the same. This is possible with a good hashcode which makes this look up instantaneous for the set. I won’t go into details of Hash Function, but it is a good read. Anyway, the runtime complexity is O(1) even on a million records. Therefore, we say runtime complexity of overall set lookup is O(1). However, if you think about space complexity for the set, we ask, how much space did you need to create that 1 item? One (unit of set storage). How much space did you need for creating 1 million items? 1 million (unit of set storage). So space complexity is varied on the input size. Therefore, we say space complexity for a HashSet is O(n). I hope this gave a good overview of what it is meant as an O(1) algorithm.

O(log n) - Logarithmic Time

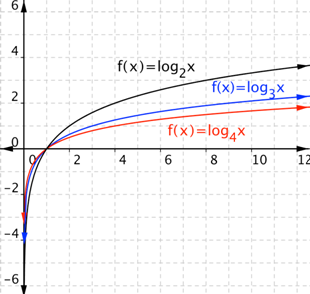

This notation is usually used for divide and conquer algorithms such as Binary Search. You first need to understand why log of n. It actually logs base 2 of n. Well, if you dig into a bit further, log n just means how many exponent of 2 are there for number n. For example, if you have 2x2x2 = 8. Log base2 of 8 = 3 since there are three 2s to make up for 8. It’s basically measuring how many times do we need to divide the number n to get to the answer? So if we take a binary search example, say we have sorted elements 1,3,5,7,9,11. Now we would like to search 3. What do we do? The first algorithm takes 1/2 of the elements and finds its 7. Since 7 is greater than 3, we ignore the last half and search in the first half. We divide the first half into another half and find that 3 is there, done. It took 2 divides to find the element 3 out of 6 elements. If we set the input large like 1 million, finding an element won’t be more than log base2 of 1000000, which is less than 20 times of search to find one element. This is very impressive. See the graph left for a better representation of the effectiveness of this algorithm:

O(n) - Linear Time

Linear time algorithm means that in the worst case, you are to look at every element until the element is found. Remember, in previous binary search example, we needed a sorted array to be able to cut out the half of the array. For linear time algorithm, we do not care since we are going to look at every element anyway. Normally, a plain for loop generally means O(n) algorithm. See example below:

private boolean containsValue(List<String> elements, String value) {

for (String element : elements) {

if (element.equals(value)) return true;

}

return false;

}Above example will go through every elements to find element. If elements do not have the element, it will have to loop all the items in elements to return false.

O(nlogn)

nlogn is basically O(n * logn). Typically, sorting takes O(nlogn). For divide and conquer, it is O(logn), but to merge to come up with sorted elements, you need to go through every item which takes O(n). Therefore, O(nlogn) altogether. 2 of the most famous sorting method that pro`duces time complexity of O(nlogn) are heapsort and merge sort. Although O(nlogn) does not have significant differences between O(n). There are certainly differences in terms of the performance. See the graph left which compares between O(n) vs O(nlogn). I would like you to see the code representation below which is O(nlogn). I think it’s a very simple code example that gives you a good picture of what is O(nlogn):

for (int i : N) {

doSomething(); // which takes O(logN)

}Here you see a loop which is O(n) however, you are running doSomething which takes O(logn) for O(n) times. So all together, it is O(nlogn).

O(\(n^2\)) - Quadratic Time

Quadratic Time means it took n * n amount of work to finish the job. Simple sorting like bubble sort, selection sort or insertion sort all takes O(\(n^2\)) time. It is probably best to show you what is O(\(n^2\)) time by providing you with a simple code example like below:

private boolean containsDuplicates(List<String> input) {

for (var i = 0; i < input.size(); i++) {

for (var j = 0; j < input.size(); j++) {

if (input.get(outer).equals(input.get(inner)) return true;

}

}

return false;

}Above example is just to illustrate what the quadratic time algorithm look like. containsDuplicates function itself can be much more optimized by using a Set or by sorting etc. When you have a double loop without a good reason, it usually is bad. It is best to avoid if you have an alternate solution like the example above.

O(\(2^n\)) - Exponential Time

Here is even slower algorithm than quadratic time. Exponential algorithm is usually not appropriate for practical use. An example of this algorithm would be Towers of the Hanoi. As you see from the code in the link, when input size is 3, a code is called 8 times by recursion. Now with larger input, the # of calls will increase \(2^n\).

O(n!) - Factorial Time

Factorial algorithm is even worse than the exponential function. Whenever n increases by 1, the running time increases by a factor of n. Here is a very simple code snippet to describe factorial time algorithm:

private void nFacRuntimeFunc(int n) {

for(int i=0; i<n; i++) {

nFacRuntimeFunc(n-1);

}

}As you see code will run nFacRuntimeFunc(n-1), n times. This algorithm reminds me of a movie, inception ![]() . You can see that this will go deeper as one is being analyzed.

. You can see that this will go deeper as one is being analyzed.

Conclusion

It is usually reasonable to write code within O(n) ranges. However, there are certainly times where you need to stretch to a slower algorithm. Choosing the right complexity is crucial, especially on a performance sensitive task. These days “space” is not really too much of a concern. However, if space is limited sometimes you would suffer time complexity to manage the program within the space. Big O notations are a great measure of how efficient your code is. You should measure time complexity analysis anytime running fast is somewhat needed for your code.The Big O Cheat Sheet did an awesome job summarizing the time complexities for a common data structures by operations with a graphical representation. You should go check it out. Also check out this simple pdf